In this post I describe some of my thoughts about the parallels between systems engineering and software development. It’s a discussion piece, meant to help start and inform debate. Comments are very welcome.

New methods for developing systems and enterprise architectures.

Matthew Lindsay BEng Hons MIET AMIMechE

This discussion paper examines the parallels between the systems engineering discipline of architecture development and similar design aids in other engineering disciplines. It suggests that architecture development is more closely related to software engineering practice than to the development of mechanical artefacts. The paper proposes that the Source Code Control System (SCCS) paradigm would benefit architecture development, both for architecture configuration control and for capturing Design Rationale (DR). It then proposes that the software engineering method of Test Driven Development (TDD) could be applied to architecture development to sharpen the focus of architecting activities (and hence increase efficiency) and to provide a better method of assessing architecture maturity. Finally, the paper proposes quantifying architectural entities in a similar manner to “Source Lines of Code” (SLOC) in the software engineering domain, to help estimate the cost of future architectural work.

The use of architectures for capturing information about system design is nothing new; indeed, it has been common practice for many organisations since the late 1980s [1]. Whilst there have been a great many developments, particularly in the use of Architecture Frameworks (AFs) to formalise and structure architecture development, many significant issues remain [2]. The maturity assessment of architecture artefacts, the difficulty of configuration control for highly dynamic, linked, parametric entities and the ongoing debates surrounding standardisation and reuse of architecture information have yet to be conclusively resolved. Some of these problems are unique to architecture development, but others have been encountered elsewhere, in other engineering specialisms.

Architecting as a practice sits quite cleanly in the systems engineering domain, principally because it touches on so many other disciplines. Most often, the architect will find themselves interacting with software engineers, hardware engineers, mechanical engineers and Subject Matter Experts (SMEs), as well as the usual project personnel (project managers, finance etc.). Viewed from the perspective of engineering as a whole, and given its relatively recent emergence as a discipline and its tangential association with other fields of engineering, it might be assumed that architecting would have inherited operating procedures from the disciplines that surround it. Indeed, by its very nature, architecture should aggregate collective knowledge and provide value above and beyond that of its component entities. Unfortunately, this does not seem to be the case: many of the more recent practices now commonplace in other fields have been overlooked, often without a suitable alternative being adopted.

Initial inspection would not suggest any immediate parallels between developing an architecture and developing, for example, a mechanical object or a piece of code, yet there are clearly equivalent themes that run through all three, and techniques that can be read across. The challenge is often in finding an equivalent paradigm for this mapping.

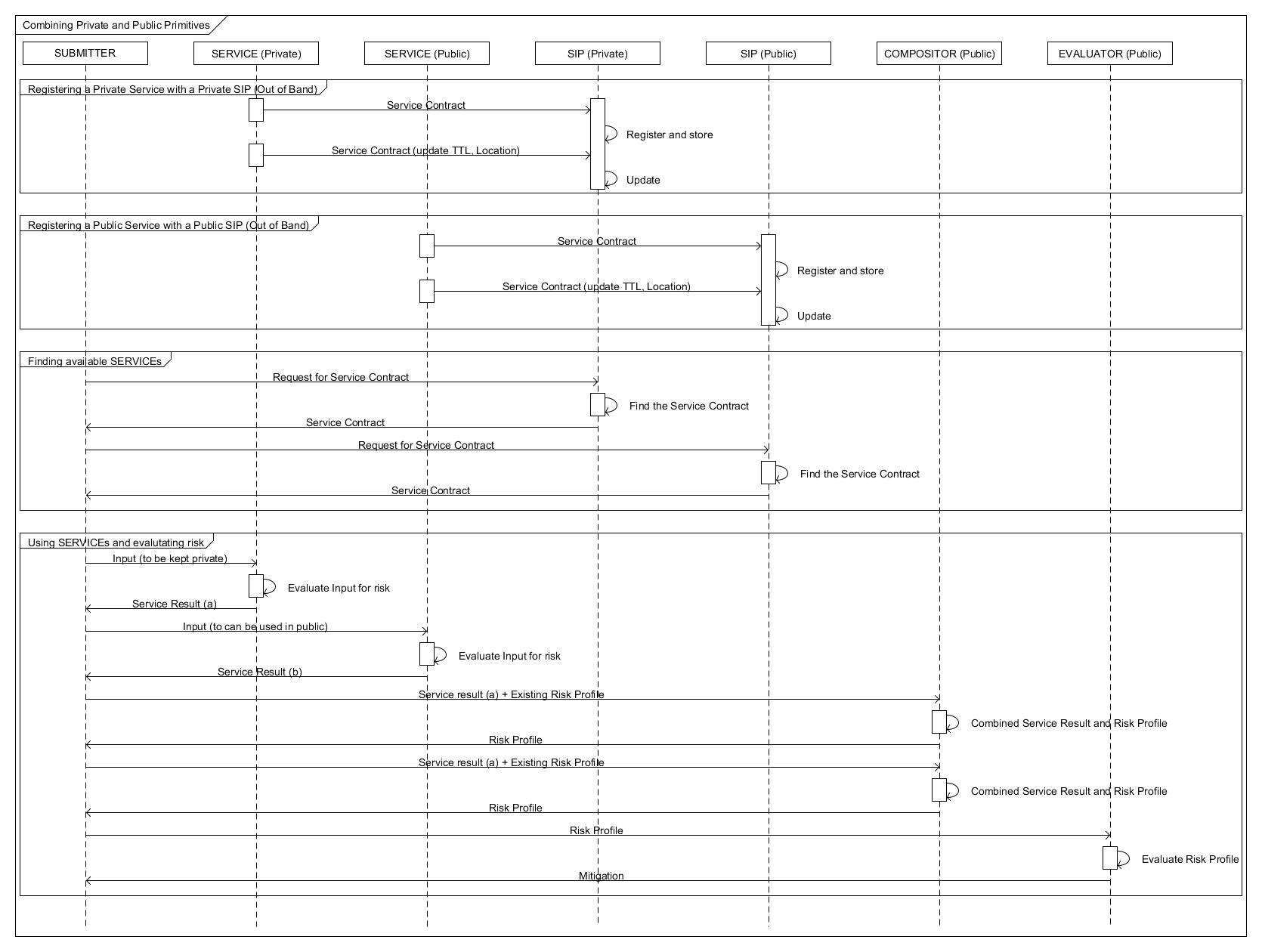

Architecture development is a highly dynamic activity, yet it requires the rigorous traceability of a production design, akin to that of a design drawing for an automotive component or aerospace artefact. This is particularly true in the high integrity applications, such as defence or finance, in which architecture is often used. In the mechanical engineering domain, this is managed (in the digital world) through configuration control of digital assemblies, with lockable parametric associations and access control to artefact parts. In previous years, however, it would have been controlled through the physical “Drawing Office” and signed off by the Chief Engineer. Under this process, effecting changes is often slow and laborious, typically requiring a Change Request to be raised and impact analysis to be carried out on the associated artefacts and any ensuing analysis. Software development typically works on tighter design cycles, and the relationships between discrete entities of code are better understood and often specified through rigorous interface definitions. Software engineers do not have to account for a legacy of paper based systems and poorly aligned toolsets. Even new mechanical “integrated CAE design suites” often lack the advanced CFD, FEA, DFM/A, costing analysis or other specialist tools required, meaning that a longer “round trip” is needed in the waterfall engineering design approach. This is less of an issue in the mechanical world because the design/build cost ratio is typically skewed heavily towards build, so even long round trips in the design cycle are comparatively small (and cheap) compared to manufacture. This is not true of the software lifecycle, where “build” is often on the order of minutes or hours whereas “design” is orders of magnitude larger [3]. Given this perspective, where should the systems engineering approach to architecture best align itself?

Is developing an architecture more akin to developing software, or to developing a physical artefact? One might argue neither, yet there is a case that the rapid development cycles of software engineering are well aligned to the tight iterations of architecture development, and that so many architectures are never physically instantiated that the design/build ratio is skewed in a similar manner to software development. Given this, it would be prudent to examine software best practice for equivalent scenarios in architecture development. Take, for instance, Source Code Control Systems (SCCS) such as CVS, SVN or Git. In this paradigm, developers edit local working copies of the design (code) and “check them in” to a shared repository holding the primary model. This primary model could be many levels deep, but most often code is checked into the “whole entity”. When code is checked in, it can be validated against the build and deconflicted against other new changes, the accompanying comments (or potentially “Design Rationale” (DR)) [4] are captured, and traceability is maintained. Most systems also offer the option to “Branch”, “Merge” and “Fork” the trunk in order to experiment with and adapt the design to suit specific purposes, or to examine potential options, in much the same way that an architect might build “as is”, “to be” and design proposition models.
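To make this read-across concrete, the following is a minimal Python sketch of what a check-in might look like if the unit of version control were an architecture entity rather than a source file. Everything in it is an assumption for illustration: `ArchitectureRepository`, `check_in`, `CommitRecord` and `ConflictError` are hypothetical names, not the interface of CVS, SVN or Git, and entities are modelled simply as named dictionaries tagged with the revision at which they last changed. A check-in is deconflicted by refusing any edit to an entity that someone else has changed since the author's working copy was taken, and every accepted check-in must carry its design rationale, which is logged against the new revision for traceability.

```python
from dataclasses import dataclass, field


class ConflictError(Exception):
    """Raised when a check-in touches entities changed since the working copy was taken."""


@dataclass
class CommitRecord:
    revision: int
    author: str
    rationale: str            # the Design Rationale (DR) captured at source
    changed: tuple[str, ...]  # names of the entities touched, for traceability


@dataclass
class ArchitectureRepository:
    # entity name -> (revision at which it last changed, entity definition)
    entities: dict[str, tuple[int, dict]] = field(default_factory=dict)
    log: list[CommitRecord] = field(default_factory=list)
    head: int = 0

    def check_out(self) -> tuple[int, dict[str, dict]]:
        """Return the head revision and a local working copy of every entity."""
        return self.head, {name: dict(defn) for name, (_, defn) in self.entities.items()}

    def check_in(self, base: int, changes: dict[str, dict],
                 author: str, rationale: str) -> int:
        """Commit entity changes made against revision `base`; return the new revision."""
        if not rationale.strip():
            raise ValueError("every check-in must record its design rationale")
        # Deconfliction: refuse edits to entities someone else changed after `base`.
        stale = sorted(name for name in changes
                       if name in self.entities and self.entities[name][0] > base)
        if stale:
            raise ConflictError(f"changed since revision {base}: {stale}")
        self.head += 1
        for name, defn in changes.items():
            self.entities[name] = (self.head, dict(defn))
        self.log.append(CommitRecord(self.head, author, rationale, tuple(sorted(changes))))
        return self.head


# Two architects start from the same baseline; the second, stale edit is rejected.
repo = ArchitectureRepository()
base, _ = repo.check_out()
repo.check_in(base, {"Radar": {"type": "System"}}, "alice", "Add surveillance capability")
try:
    repo.check_in(base, {"Radar": {"type": "Subsystem"}}, "bob", "Reclassify radar")
except ConflictError as err:
    print("rejected:", err)  # rejected: changed since revision 0: ['Radar']
```

The design choice here is optimistic, entity-level concurrency: architects edit in parallel and conflicts are detected only at check-in, much as SVN refuses to commit a file that is out of date with the repository. A practical tool would also need to merge non-overlapping edits and deconflict relationships, which this sketch omits.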

The SCCS paradigm could be harnessed well in the development of an architecture. A local version of the model and the appropriate views would be edited and the changed entities “checked back in”, simultaneously capturing the associated DR at source and deconflicting the changes against the construct that already exists. Indeed, identifying the affected views, entities and entity relationships upon “check in” would immediately flag up the effect of the changes and launch the analysis and deconfliction process. This could be coupled with a powerful “locking” mechanism on entities, relationships and views to ensure that integrity is maintained.
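The impact analysis and locking described above could be sketched as follows, again as a minimal Python illustration under stated assumptions rather than a definitive design. The hypothetical `ArchitectureModel` class treats relationships as undirected links between entity names and views as named sets of entities; `impact` performs a breadth-first search out to a chosen number of relationship hops and reports every view that displays a changed or related entity, while `check_in` refuses changes to any item locked by another architect. The entity, view and architect names in the usage lines are invented.

```python
from collections import defaultdict, deque


class ArchitectureModel:
    """Hypothetical in-memory model: entities, relationships and views, plus locks."""

    def __init__(self) -> None:
        self.neighbours: dict[str, set[str]] = defaultdict(set)
        self.views: dict[str, set[str]] = {}
        self.locks: dict[str, str] = {}  # locked item name -> owning architect

    def relate(self, a: str, b: str) -> None:
        # Relationships are treated as undirected for impact analysis.
        self.neighbours[a].add(b)
        self.neighbours[b].add(a)

    def add_view(self, view: str, entities: set[str]) -> None:
        self.views[view] = set(entities)

    def lock(self, item: str, owner: str) -> None:
        """Lock an entity, relationship or view name; re-locking by the holder is a no-op."""
        holder = self.locks.setdefault(item, owner)
        if holder != owner:
            raise PermissionError(f"{item} is locked by {holder}")

    def impact(self, changed: set[str], depth: int = 1) -> tuple[set[str], set[str]]:
        """Entities within `depth` relationships of a change, and views showing any of them."""
        reached = set(changed)
        frontier = deque((entity, 0) for entity in changed)
        while frontier:  # breadth-first search, stopping at `depth` hops
            entity, hops = frontier.popleft()
            if hops == depth:
                continue
            for nxt in self.neighbours.get(entity, set()):
                if nxt not in reached:
                    reached.add(nxt)
                    frontier.append((nxt, hops + 1))
        views = {v for v, members in self.views.items() if members & reached}
        return reached - set(changed), views

    def check_in(self, changed: set[str], owner: str) -> tuple[set[str], set[str]]:
        """Refuse changes to items locked by another architect, then report the impact."""
        blocked = sorted(item for item in changed if self.locks.get(item, owner) != owner)
        if blocked:
            raise PermissionError(f"locked by others: {blocked}")
        return self.impact(changed)


model = ArchitectureModel()
model.relate("Radar", "CommandPost")
model.relate("CommandPost", "Datalink")
model.add_view("OperationalView", {"CommandPost", "Datalink"})
model.add_view("SystemsView", {"Radar"})
model.lock("Datalink", "carol")
related, views = model.check_in({"Radar"}, "alice")
print(sorted(related), sorted(views))  # ['CommandPost'] ['OperationalView', 'SystemsView']
```

Treating relationships as undirected is a deliberately conservative assumption: it flags more of the model for review than a direction-aware analysis would, which seems the safer failure mode for a high integrity architecture.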

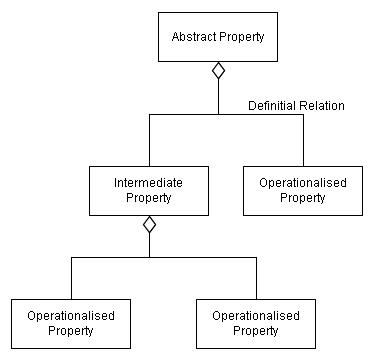

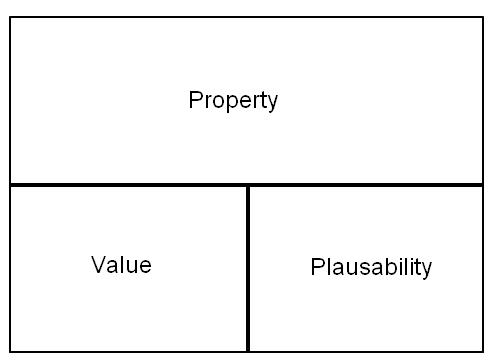

The power of SCCS may not be the only principle that could be harnessed from the software engineering discipline. The issues related to maturity assessment of architectures are often ignored, or dismissed as irrelevant on the assumption that the architecture will only be used as a discussion and analysis tool. Whilst it is true that an architecture tends not to be “executed” or “instantiated” in a literal manner (as code can be “compiled” or a design can be “manufactured”), its maturity is no less important, especially in architecture centric projects in which the information model it contains might be the central location of the “design”. Current approaches to maturity assessment tend to focus either on evaluating the architecture as a whole, using methods such as the “Enterprise Architecture Scorecard” or the “Architecture Tradeoff Analysis Method” (ATAM) [5,6], or on qualitative analysis of specific architecture products. Neither approach is universally suitable for iterative architecture development; indeed, the subjective nature of arbitrary architecture product assessment could be misleading when assessments are aggregated upwards rather than downwards (bottom up assessment of product maturity as opposed to top down assessment of the required products).

In recent years, one successful approach to software development has been “Test Driven Development” (TDD). In this methodology, unit tests are written before any solution is attempted, and the developer writes just enough code to pass them. At first, this methodology is often resisted because it requires a lot of “up front” effort before any problem solving can be undertaken. Once correctly implemented, however, it provides considerable benefits for software integrity and programmer efficiency, as well as for maturity assessment: the test harness serves as both a specification and an assessment framework. This paradigm could translate well into the architectural domain. If the architecture team wrote “unit tests” for the architecture (i.e. decomposed the purpose of the architecture into granular questions that must be answered by individual or related products), then architecture work would be bounded by the need to meet that specification rather than by an attempt at “documenting the world”. Running the test harness each time updates are committed would also alert architects when newly committed changes either break the integrity of the model or invalidate previously passed tests.
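A minimal Python sketch of such a harness follows, assuming (hypothetically) a model made up of typed entities and `(source, verb, target)` relationships. Each “unit test” is a predicate encoding one question the architecture must answer; the two questions shown, and the `Model` and `run_harness` names, are invented for illustration. Maturity is taken here as the fraction of required questions the model currently answers, which is a top down measure in the sense described earlier, and any test that passed before a commit but fails after it is reported as a regression.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Model:
    """A hypothetical architecture model: typed entities and (source, verb, target) links."""
    entities: dict[str, str] = field(default_factory=dict)  # name -> kind
    relationships: set[tuple[str, str, str]] = field(default_factory=set)


# Each "unit test" is a question the architecture must be able to answer.
# An empty model fails deliberately, so nothing passes vacuously.
def every_capability_is_realised(m: Model) -> bool:
    capabilities = {n for n, kind in m.entities.items() if kind == "Capability"}
    realised = {target for (_, verb, target) in m.relationships if verb == "realises"}
    return bool(capabilities) and capabilities <= realised


def every_system_has_an_owner(m: Model) -> bool:
    systems = {n for n, kind in m.entities.items() if kind == "System"}
    owned = {target for (_, verb, target) in m.relationships if verb == "owns"}
    return bool(systems) and systems <= owned


TESTS: dict[str, Callable[[Model], bool]] = {
    "capabilities realised": every_capability_is_realised,
    "systems owned": every_system_has_an_owner,
}


def run_harness(model: Model, tests: dict[str, Callable[[Model], bool]],
                previously_passed: set[str]) -> tuple[float, set[str], set[str]]:
    """Return (maturity as fraction of questions answered, passing tests, regressions)."""
    passed = {name for name, test in tests.items() if test(model)}
    maturity = len(passed) / len(tests) if tests else 0.0
    return maturity, passed, previously_passed - passed


model = Model({"Surveillance": "Capability", "Radar": "System"},
              {("Radar", "realises", "Surveillance")})
maturity, passed, _ = run_harness(model, TESTS, previously_passed=set())
print(maturity, sorted(passed))  # 0.5 ['capabilities realised']

# A later commit removes the realisation link: the harness flags the regression.
model.relationships.discard(("Radar", "realises", "Surveillance"))
_, _, regressions = run_harness(model, TESTS, previously_passed=passed)
print(regressions)  # {'capabilities realised'}
```

In keeping with TDD, the questions would be written before any modelling, and only enough of the model built to make them pass. Both example tests fail on an empty model, so an architecture cannot score as mature simply by containing nothing.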

The third proposition for “methodology pull-through” is to apply the common software engineering practice of measuring “Source Lines of Code” (SLOC) to the quantification of architectural entities, relationships and views for the purposes of estimation. Whilst this certainly would not provide a definitive solution for estimating the cost of future architectural work, similar metrics could give project managers tangible estimation methods for architecture centric projects.
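As an illustration of how such a metric might be used, the following Python sketch counts entities, relationships and views, combines them into a weighted size (analogous to a SLOC count) and calibrates an effort-per-point rate from completed work. The weights, the `ArchitectureSize`, `calibrate` and `estimate` names and the historical figures in the usage lines are all hypothetical; they are not drawn from any real project or published model.

```python
from collections.abc import Mapping
from dataclasses import dataclass

# Hypothetical weights: the relative effort of a relationship or a view compared with
# an entity. An organisation would need to calibrate these against its own history.
DEFAULT_WEIGHTS: Mapping[str, float] = {"entities": 1.0, "relationships": 0.5, "views": 4.0}


@dataclass(frozen=True)
class ArchitectureSize:
    entities: int
    relationships: int
    views: int

    def points(self, weights: Mapping[str, float] = DEFAULT_WEIGHTS) -> float:
        """Weighted size: the architectural analogue of a SLOC count."""
        return (weights["entities"] * self.entities
                + weights["relationships"] * self.relationships
                + weights["views"] * self.views)


def calibrate(history: list[tuple[ArchitectureSize, float]],
              weights: Mapping[str, float] = DEFAULT_WEIGHTS) -> float:
    """Effort per size point, from (size, actual effort) pairs for completed work."""
    total_points = sum(size.points(weights) for size, _ in history)
    if total_points == 0:
        raise ValueError("history contains no measurable architecture")
    return sum(effort for _, effort in history) / total_points


def estimate(size: ArchitectureSize, effort_per_point: float,
             weights: Mapping[str, float] = DEFAULT_WEIGHTS) -> float:
    """Linear estimate: size in points multiplied by the calibrated rate."""
    return size.points(weights) * effort_per_point


# Made-up history, purely for illustration: (size, effort in person-days).
history = [
    (ArchitectureSize(entities=120, relationships=200, views=10), 60.0),
    (ArchitectureSize(entities=80, relationships=120, views=5), 35.0),
]
rate = calibrate(history)
print(round(estimate(ArchitectureSize(100, 150, 8), rate), 1))  # 46.8
```

A linear rate is the simplest possible calibration. SLOC-based models such as COCOMO instead relate effort to a power of size, and a similar form could be fitted once an organisation had enough architectural history to support it.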

This paper has discussed the parallels between the systems engineering practice of architecting and the established practices of the software engineering discipline. It has proposed the use of a “Source Code Control System” (SCCS) for architectures as a way to control architecture development in terms of integrity, configuration control, revision control and capture of design rationale. In addition, it has discussed the potential benefits of “Test Driven Development” (TDD) for architecture development, in order to help architects maintain architecture integrity, focus architectural effort and better measure architecture maturity. Finally, it has proposed the quantification of architectural products, by analogy with the measurement of “Source Lines of Code”, in order to offer a tangible metric for estimating future architectural effort.

References

[1] Zachman, J.A. “A Framework for Information Systems Architecture.” IBM Systems Journal, Volume 26, Number 3, 1987.

[2] Sessions, R. “A Comparison of the Top Four Enterprise-Architecture Methodologies.” ObjectWatch, Inc. http://msdn.microsoft.com/en-us/library/bb466232.aspx

[3] Reeves, J.W. “Code as Design: Three Essays by Jack Reeves.” Developer.* Magazine, 1992, 2005. http://www.developerdotstar.com/mag/articles/reeves_design_main.html

[4] Ali Babar, M. and Gorton, I. “A Tool for Managing Software Architecture Knowledge.” Second Workshop on Sharing and Reusing Architectural Knowledge: Architecture, Rationale, and Design Intent, IEEE Computer Society, 2007.

[5] Schekkerman, J. “Enterprise Architecture Scorecard”, Version 2.1. Institute for Enterprise Architecture Development. http://www.enterprise-architecture.info

[6] Bass, L. and Kazman, R. “Architecture-Based Development.” Technical Report CMU/SEI-99-TR-007, ESC-TR-99-007, Carnegie Mellon Software Engineering Institute, April 1999.